Change Memo

National Assessment of Educational Progress (NAEP) 2026 Amendment 1

Change Memo

OMB: 1850-0928

July 25, 2025

MEMORANDUM

To: Bev M. Pratt, OMB

From: Gina Broxterman and Enis Dogan, NCES

Through: Matthew Soldner, NCES

Re: National Assessment of Educational Progress (NAEP) 2026 Amendment #1 (OMB# 1850-0928 v.37)

The National Assessment of Educational Progress (NAEP), conducted by the National Center for Education Statistics (NCES), is a federally authorized survey of student achievement at grades 4, 8, and 12 in various subject areas, such as mathematics, reading, writing, science, U.S. history, and civics. The authorizing legislation requires fair and accurate presentation of achievement data and permits the collection of background, noncognitive, or descriptive information that is related to academic achievement and aids in fair reporting of results. The intent of the law is to provide representative sample data on student achievement for the nation, the states, and subpopulations of students and to monitor progress over time. Because of NAEP's design, respondent burden alternates between relatively low levels in national-level administration years and substantially higher levels in state-level administration years, when the sample must be large enough to support estimates for individual states and some large urban districts.

NAEP 2026 will include:

Main NAEP Operational assessments in reading and mathematics at grades 4 and 8 (the first administration of the new frameworks for these subjects), civics and U.S. history at grade 8, and mathematics at grades 4 and 8 in Puerto Rico (which will also use the new framework); and

Main NAEP Pilot assessments in grades 4, 8, and 12 (reading and mathematics) and in Puerto Rico for grades 4 and 8 mathematics.

This Amendment #1 revises the NAEP 2026 Clearance package (OMB# 1850-0928 v.36). Since the 30-day Clearance package, the scope has changed, most notably through the removal of the Field Trial. In addition, NCES decided not to administer Pilot teacher and school administrator questionnaires for grade 8 in the mainland U.S. or for grades 4 and 8 in Puerto Rico. These updates are reflected in Exhibit 1 in Part A. Part A also updates the costs to the Federal Government (an $8,197,412 reduction, from $129,534,907 in the Clearance package to $121,337,495 in Amendment #1) and the burden hours (a slight increase, from 449,560 hours in the Clearance package to 456,764 hours in Amendment #1). The increase in burden results from correcting a discrepancy in the number of schools in the School Device Model; the corrected figure appears in Exhibit 1.
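As an arithmetic check on the figures quoted above, the short Python sketch below recomputes the cost reduction and the burden-hour increase from the Clearance package and Amendment #1 totals. The variable names are illustrative; only the dollar and hour figures come from this memo.

```python
# Totals quoted in this memo: Clearance package (v.36) vs. Amendment #1 (v.37).
CLEARANCE_COST = 129_534_907   # dollars
AMENDMENT_COST = 121_337_495   # dollars
CLEARANCE_HOURS = 449_560      # burden hours
AMENDMENT_HOURS = 456_764      # burden hours

# Positive values mean "costs went down" and "burden went up", respectively.
cost_reduction = CLEARANCE_COST - AMENDMENT_COST
hours_increase = AMENDMENT_HOURS - CLEARANCE_HOURS
relative_increase = hours_increase / CLEARANCE_HOURS

print(f"Cost reduction:  ${cost_reduction:,}")
print(f"Burden increase: {hours_increase:,} hours ({relative_increase:+.1%})")
```

The relative change (about 1.6 percent) is what supports describing the burden increase as slight.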

In addition to the scope changes, Amendment #1 updates the committee listings in Appendix A, communication materials in Appendix D, feedback forms in Appendix E, Assessment Management System (AMS) screens in Appendix I, and the Operational and Pilot survey questionnaires (SQs) in Appendices J-1 (student), J-2 (teacher), J-3 (school administrator), and J-S (Spanish student, teacher, and school administrator SQs). The remaining updates to the NAEP 2026 documents will be submitted in Amendment #2 in late summer 2025.

The table below and the following pages summarize the changes included in this submission.

Summary of All Changes

Document | Changes
Part A |
Part B |
Appendix A |
Appendix D |
Appendix E |
Appendix I |
Appendix J-1 |
Appendix J-2 |
Appendix J-3 |
Appendix J-S |

Part A Changes

A.1. Circumstances Making the Collection of Information Necessary

A.1.a. Purpose of Submission

The NAEP assessments contain two different types of items: “cognitive” assessment items, which measure what students know and can do in an academic subject; and “survey” or “non-cognitive” items, which gather information such as demographic variables, as well as construct-related information, such as courses taken. The survey portion includes a collection of data from students, teachers, and school administrators. Since NAEP assessments are administered uniformly using the same sets of test forms across the nation, NAEP results serve as a common metric for all states and select urban districts. The assessment stays essentially the same from year to year, with only carefully documented changes. This permits NAEP to provide a clear picture of student academic progress over time.

NAEP consists of two assessment programs: the NAEP Long-Term Trend (LTT) assessment and the main NAEP assessment. The LTT assessments are given at the national level only and are administered to students at ages 9, 13, and 17 in a manner that is very different from that used for the main NAEP assessments. LTT reports mathematics and reading results that present trend data since the 1970s. NAEP provides results on subject-matter achievement, instructional experiences, and school environment for populations of students (e.g., all fourth-graders) and for groups within those populations (e.g., by sex [male and female students] or by race/ethnicity group). NAEP does not provide scores for individual students or schools. The main NAEP assessments report current achievement levels and trends in student achievement at grades 4, 8, and 12 for the nation and, for certain assessments (e.g., reading and mathematics), for states and select urban districts. The Trial Urban District Assessment (TUDA) began as a special project to determine the feasibility of reporting district-level results for large urban districts; it proved successful and continues to report district-level results. Currently, the following 26 districts participate in the TUDA program: Albuquerque, Atlanta, Austin, Baltimore City, Boston, Charlotte, Chicago, Clark County (NV), Cleveland, Dallas, Denver, Detroit, District of Columbia (DCPS), Duval County (FL), Fort Worth, Guilford County (NC), Hillsborough County (FL), Houston, Jefferson County (KY), Los Angeles, Miami-Dade, Milwaukee, New York City, Orange County (FL), Philadelphia, and San Diego.

The potential universe of student respondents for NAEP 2026 is estimated at 12 million students in grades 4, 8, and 12, attending approximately 154,000 public and private elementary and secondary schools in the 50 states and the District of Columbia, Bureau of Indian Education schools, and Department of Defense Education Activity (DoDEA) schools, as well as fourth- and eighth-grade public schools in Puerto Rico.

This request is to conduct NAEP in 2026, specifically as follows:

Main NAEP operational assessments will include reading and mathematics at grades 4 and 8 (the first administration of the new frameworks for these subjects) and civics and U.S. history at grade 8;

In Puerto Rico, mathematics at grades 4 and 8 will be the only subject assessed and will use the new framework; and

Main NAEP Pilot assessments will include reading and mathematics at grades 4, 8, and 12; in Puerto Rico, mathematics at grades 4 and 8 will be the only subject piloted.

Main NAEP is changing its operational assessment delivery model. Whereas NAEP previously administered assessments on NAEP Surface Pros or Chromebooks, which required numerous NAEP field staff, the program continues to transition to a model that is ultimately less expensive and more closely aligned with the administration model used in state assessments. Specifically, NAEP will administer the assessment using school devices and the internet. For schools that cannot meet the minimum specifications for the use of school devices, NAEP will provide an alternate delivery model using NAEP Chromebooks. Additionally, to evaluate the impact of the transition to school devices, a sample of schools will be assigned to the NAEP Device Model by default, regardless of whether they meet the eligibility requirements for the School Device Model.

As NAEP transitions to administering primarily on school devices, a staged approach is underway so that trends can be measured across time. Specifically, NAEP conducted a School-based Equipment study in 2024 (OMB# 1850-0803 v.347) and a Field Test in 2025 (OMB# 1850-0803 v.353) to learn more about how students and schools interact with the eNAEP system on school devices compared with NAEP Chromebooks and Surface Pros, and to prepare for the use of school devices in future operational NAEP assessments.

Some of the assessment, questionnaire, and recruitment materials are translated into Spanish. Specifically, Spanish versions of the student assessments and questionnaires are used for qualified English learner (EL) students who are eligible for a bilingual accommodation. Historically, this has been done for all operational grade 4 and 8 assessments as permitted by the framework. In addition, Puerto Rican Spanish versions are offered for all students in Puerto Rico. Accordingly, Spanish versions of relevant communication materials for parents, teachers, and staff, as well as teacher and school questionnaires, are provided.

This Amendment #1 submission updates the previous Clearance Package for NAEP 2026. The Clearance Package included both the 60-day and the subsequent 30-day public comment period notices published in the Federal Register. The 60-day posting was completed in December 2024, and the 30-day posting was completed by July 2025. This package includes refinements to the 2026 scope, as well as additional materials for the 2026 assessment that were not available for the Clearance Package. These materials include additional field communications (Appendix D), Feedback Forms (Appendix E), additional Assessment Management System (AMS) screens (Appendix I), and survey questionnaires (Appendices J-1, J-2, J-3, and J-S), which are available for this 30-day public posting. An Amendment #2 to this Clearance package is planned for submission in the coming months to update the materials detailed on the following page.

NAEP 2026 Amendment Schedule Table

Amendment #2 (August/September 2025) |

A.1.b. Legislative Authorization

The statute and regulation mandating or authorizing the collection of this information can be found at https://uscode.house.gov/view.xhtml?req=(title:20%20section:9622%20edition:prelim)%20OR%20(granuleid:USC-prelim-title20-section9622)&f=treesort&edition=prelim&num=0&jumpTo=true.

A.1.c.3. Survey Items

School Questionnaires

The school questionnaire provides supplemental information about school factors that may influence students’ achievement. It is given to the principal or another official of each school that participates in the NAEP assessment. While schools’ completion of the questionnaire is voluntary, NAEP encourages schools’ participation since it makes the NAEP assessment more accurate and complete. The school questionnaire is organized into different parts. The first part tends to cover characteristics of the school, including the length of the school day and year, school enrollment, absenteeism, dropout rates, and the size and composition of the teaching staff. Subsequent parts of the school questionnaire tend to cover policies, curricula, testing practices, special priorities, and schoolwide programs and problems. The questionnaire also collects information about the availability of resources, policies for parental involvement, special services, and community services.

Development of Survey Items

The Background Information Framework and the Governing Board’s Policy on the Collection and Reporting of Background Data (located at https://www.nagb.gov/content/nagb/assets/documents/policies/collection-report-backg-data.pdf) guide the collection and reporting of non-cognitive assessment information. In addition, subject-area frameworks provide guidance on subject-specific, non-cognitive assessment questions to be included in the questionnaires. The development process is very similar to that used for cognitive items, including review of the existing item pool; development of more items than are intended for use; review by experts (including the standing committee); and cognitive interviews with students, teachers, and schools. When developing the questionnaires, NAEP uses a pretesting process so that the final questions are sensitive and minimally intrusive, are grounded in educational research, and yield answers that provide information relevant to the subject being assessed. All non-cognitive items undergo one-on-one cognitive interviews, which are useful for identifying questionnaire and procedural problems before larger-scale pilot testing is undertaken.

To minimize burden on respondents while maximizing the constructs addressed by the questionnaires, NAEP may spiral items across respondents and/or rotate some non-required items across assessment administrations. The possible “library” of items for the NAEP 2026 questionnaires, for each subject and respondent, is included in Appendix F. Most of the Main NAEP questionnaires are provided in this submission in Appendices J-1, J-2, J-3, and J-S (Spanish SQ items).
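Spiraling distributes different item sets across respondents so that each respondent answers only a subset of items while the full set is still covered across the sample. The Python sketch below illustrates a simple round-robin version with a random starting point. It is a hypothetical illustration, not NAEP's operational spiraling procedure; the function name, block labels, and respondent IDs are assumptions.

```python
import random
from collections import Counter


def spiral_assign(respondent_ids, item_blocks, seed=0):
    """Hypothetical round-robin spiral of item blocks across respondents.

    A random starting offset is drawn once so the first respondent does not
    always receive the same block; blocks are then handed out in order and
    wrap around, so each block reaches roughly the same number of respondents.
    """
    rng = random.Random(seed)
    start = rng.randrange(len(item_blocks))
    return {
        rid: item_blocks[(start + i) % len(item_blocks)]
        for i, rid in enumerate(respondent_ids)
    }


# Hypothetical example: 10 students and 3 rotating blocks of non-required items.
students = [f"S{n:02d}" for n in range(1, 11)]
blocks = ["Block A", "Block B", "Block C"]
assignment = spiral_assign(students, blocks, seed=2026)
print(Counter(assignment.values()))
```

With 10 respondents and 3 blocks, one block is seen by 4 respondents and the other two by 3 each, and no two consecutive respondents receive the same block.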

A.1.c.5. Digitally Based Assessments (DBAs)

Our nation’s schools continue to make digital tools an integral part of the learning environment, reflecting the knowledge and skills students need for future postsecondary success. NAEP is keeping pace with this changing educational landscape by continuing to expand its use of DBAs.

In 2026, the NAEP assessment will be administered on school devices, when possible, using the NAEP Assessment Application, also known as eNAEP. During the preassessment phase, the application will be installed and confirmed on school devices. Sampled schools that do not meet the technical requirements for school devices will be provided with NAEP Chromebooks, which use the NAEP-provided network and have the NAEP Assessment Application installed, for students to complete the assessment. Additionally, to evaluate the impact of the transition to school devices, a sample of schools will be assigned to the NAEP Device Model by default, regardless of whether they meet the eligibility requirements for the School Device Model.

Leveraging Technologies

NAEP DBAs use testing methods and item types that reflect the use of technology in education. Examples of such item types include the following:

Multimedia elements, such as video and audio clips, are used in NAEP assessments. For example, the following elements are included:

Immersive reading experiences that mimic complex websites students would encounter in school or in general research.

Imagery that provides context-building images, such as diagrams that track and convey progress visually.

Audio: All scenario-based tasks (SBTs) use real voice actors to make the characters more lifelike and engaging and to bring in multiple modalities for increased engagement.

Interactive items and tools: Some questions may allow the use of embedded technological features to form a response. For example, students may use “drag and drop” or “click-click” functionality to place labels on a graphic or may tap an area or zone on the screen to make a selection. Other questions may involve the use of digital tools. In the mathematics DBA, an online calculator is available for students to use when responding to some items. NAEP interactive item components, such as the ruler, number line, bar graph, and various coordinate-grid-based line and point tools, expand measurement capabilities with tools that reflect how students learn fundamental mathematics concepts.

Immersive SBTs: SBTs use multimedia features and tools to engage students in rich, authentic problem-solving contexts. NAEP’s first scenario-based tasks were administered in 2009, when students at grades 4, 8, and 12 were assessed with interactive computer tasks in science. The science tasks asked students to solve scientific problems and perform experiments, often by simulation. Such tasks give students more opportunities than a paper-based assessment (PBA) to demonstrate skills involved in doing science without many of the logistical constraints associated with a natural or laboratory setting. Some science tasks administered in 2019 can be explored at https://www.nationsreportcard.gov/science/sample-questions/. NAEP also administered scenario-based tasks in the 2018 technology and engineering literacy (TEL) assessment, where students were challenged to work through computer simulations of real-world situations they might encounter in their everyday lives. Sample TEL tasks can be viewed at https://www.nationsreportcard.gov/tel/tasks/. NAEP is continuing to expand the use of scenario-based tasks to measure knowledge and skills in other subject areas, such as mathematics and reading. SBTs have been part of the operational reading assessment since 2019, and operational mathematics SBTs will be administered for the first time in 2026.

DBA technology also allows NAEP to capture information about what students do while attempting to answer questions. Whereas a PBA yields only the final responses in the test form, a DBA captures the actions students perform while interacting with the assessment tasks, as well as the time at which they take these actions. These interactions with the assessment interface are not used to assess students’ knowledge and skills, but they provide valuable context on item performance, time on task, and tool usage. For example, more proficient students may use digital tools, such as the calculator in mathematics or the spell checker in writing assessments, differently from less proficient students. As such, NAEP may uncover more information about which actions students take when they successfully (or unsuccessfully) answer specific questions on the assessment.

NAEP plans to capture the following actions in the DBA:

Student navigation (e.g., clicking back/next; clicking on the progress navigator; clicking to leave a section);

Student use of tools (e.g., zooming; using text-to-speech; opening and interacting with the scratchwork tool; opening and interacting with the calculator; using the equation editor; clicking the change language button; selecting the theme; opening the Help tool);

Student responses (e.g., clicking a choice; eliminating a choice; clearing an answer; keystroke log of student typed text);

Timing data (e.g., the time between events, which can be used to deconstruct student time spent on certain tasks);

Other student events (e.g., vertical and horizontal scrolling; media interaction such as playing an audio stimulus); and

Tutorial events (e.g., student interactions with the tutorial practice item, or a lack of interaction with the tutorial when prompted).
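The timing data listed above can be derived from a timestamped event log. The Python sketch below assumes a hypothetical, simplified event schema (item identifier, action name, seconds since session start); it is not the eNAEP log format. It shows how the gaps between consecutive events yield both per-event latencies and the time spent on each item.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    """One logged student action (hypothetical schema, not the eNAEP format)."""
    item_id: str
    action: str        # e.g., "enter_item", "open_calculator", "click_choice", "next"
    timestamp: float   # seconds since the start of the session


def time_between_events(events):
    """Return (action, seconds since the previous event) for every event after the first."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    return [
        (cur.action, cur.timestamp - prev.timestamp)
        for prev, cur in zip(ordered, ordered[1:])
    ]


def time_on_item(events):
    """Sum the gaps between consecutive events, crediting each gap to the
    item the student was on when the gap began."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    totals = defaultdict(float)
    for prev, cur in zip(ordered, ordered[1:]):
        totals[prev.item_id] += cur.timestamp - prev.timestamp
    return dict(totals)


# Hypothetical log for two mathematics items.
log = [
    Event("M1", "enter_item", 0.0),
    Event("M1", "open_calculator", 12.5),
    Event("M1", "click_choice", 40.0),
    Event("M1", "next", 45.0),
    Event("M2", "enter_item", 45.5),
    Event("M2", "click_choice", 70.5),
    Event("M2", "next", 75.5),
]
print(time_between_events(log)[:2])
print(time_on_item(log))
```

Crediting each gap to the item on screen when it began is one simple convention; an analysis could instead exclude transition gaps or cap long idle periods.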

Development of Digitally Based Assessments (DBAs)

NAEP’s item and system development processes include several types of activities that help to ensure our DBAs measure the subject-area knowledge and skills outlined in the NAEP frameworks and not students’ ability to use the device or the particular interface elements and digital tools included in the DBA.

During item development, new digitally based item types and tasks are studied and pretested with groups of students. The purpose of these pretesting activities is to determine whether construct-irrelevant features, such as confusing wording, unfamiliar interactivity or contexts, or other factors, prevent students from demonstrating the targeted knowledge, skills, and abilities. Such activities help identify usability, design, and validity issues so that items and tasks may be further revised and refined prior to administration.

Development of the assessment delivery system, eNAEP, is informed by best practices in accessibility and user experience design. Decisions about the availability, appearance, and functionality of system features and tools are also made based on the results of usability testing with students.

Accommodations and Universal Design Elements with DBA

Technologies are improving NAEP’s ability to provide appropriate accommodations that allow greater participation and provide universal access for all students, including those with disabilities and English learners. Universal Design Elements allow for zooming and read-aloud/text-to-speech for test items. These features are available in all assessments except for the reading cognitive content. In addition, students taking the assessment can choose high-contrast color theming, use a scratchwork/highlighter tool, or eliminate answer choices.

In addition to these Universal Design Elements, NAEP also continues to provide accommodations to students with Individualized Education Programs (IEPs), Section 504 plans, or English learning plans, as described in section A.1.c.4. Some accommodations are available in the testing system (such as additional time, a magnification tool, or a Spanish/English version of the test), while others are provided by the test administrator or the school (such as breaks during testing, sign language interpretation of the test, or a bilingual dictionary). Section B.2.b. provides more information on the classification of students and the assignment of accommodations.

A.1.c.6. Assessment Types

NAEP uses four types of assessment activities, which may simultaneously be in the field during any given data collection effort. Each is described in more detail below.

Prior to pilot testing, many new items are pre-tested with small groups of sample participants (cleared under the NCES pretesting generic clearance agreement; OMB# 1850-0803). All non-cognitive items undergo one-on-one cognitive interviews, which are useful for identifying questionnaire and procedural problems before larger-scale pilot testing is undertaken. Select cognitive items also undergo pre-pilot testing, such as item tryouts or cognitive interviews, in order to test out new item types or formats, or challenging content. In addition, usability testing is conducted on new features or tools of the eNAEP system.

Special Studies

Special studies are an opportunity for NAEP to investigate specific areas of innovation without impacting the reporting of NAEP results. Previous special studies have focused on linking NAEP to other assessments or linking across NAEP same-subject frameworks, investigating the expansion of the item pool, evaluating specific accommodations, investigating administration modes, and providing targeted data on specific student populations.

Field Trial

The purpose of a field trial is to perform a dress rehearsal prior to an operational administration. The field trial is conducted with students in a real classroom environment at a small number of schools, allowing the system to be tested as it will be used operationally and helping to identify platform, system, or operational issues prior to an administration.

A.1.d. Overview of 2026 NAEP Assessments

The Governing Board determines NAEP policy and the assessment schedule,1 and future Governing Board decisions may result in changes to the plans represented here. Any changes will be presented in subsequent clearance packages or in amendments to the current package.

The 2026 data collection will consist of the following:

Main NAEP operational assessments will include reading and mathematics at grades 4 and 8 (the first administration of the new frameworks for these subjects) and civics and U.S. history at grade 8;

In Puerto Rico, mathematics at grades 4 and 8 will be the only subject assessed and will use the new framework; and

Main NAEP Pilot assessments will include reading and mathematics at grades 4, 8, and 12; in Puerto Rico, mathematics at grades 4 and 8 will be the only subject piloted.

The 2026 operational assessment will include a bridge study comparing NAEP devices and school devices to evaluate whether scores from the two different assessment modes are comparable. The NAEP program will transition operationally to assessments administered on school devices (e.g., desktops, laptops, tablets with keyboards). Schools that do not meet NAEP’s minimum technology requirements will be assessed on NAEP devices.

However, the 2026 bridge study cannot simply compare the two groups of schools (i.e., schools that qualify for and assess on school devices versus schools that do not qualify and assess on NAEP devices), because the two groups may differ in characteristics other than the device used. Therefore, some schools that qualify to be assessed on school devices will instead be assessed on NAEP devices, establishing a common linking population.
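The device-assignment rule described in A.1.a and in this section can be summarized in a short Python sketch. The enum and function names are illustrative, and the bridge-sample draw is a hypothetical simple random subsample with an arbitrary example fraction; the memo does not specify how the bridge schools are selected or what share of qualified schools they represent.

```python
import random
from enum import Enum


class DeliveryModel(Enum):
    SCHOOL_DEVICE = "School Device Model"
    NAEP_DEVICE = "NAEP Device Model"  # NAEP Chromebooks on the NAEP-provided network


def assign_delivery_model(meets_school_device_spec: bool,
                          in_bridge_sample: bool) -> DeliveryModel:
    """Assignment rule as described in the memo.

    Schools that cannot meet the minimum technology requirements use NAEP
    devices. Schools that can meet them use their own devices, unless they
    were sampled for the bridge study, in which case they are assigned to
    the NAEP Device Model by default.
    """
    if not meets_school_device_spec or in_bridge_sample:
        return DeliveryModel.NAEP_DEVICE
    return DeliveryModel.SCHOOL_DEVICE


def draw_bridge_sample(qualified_school_ids, fraction, seed=0):
    """Hypothetical: simple random subsample of qualified schools.
    The actual bridge-sample design and fraction are not specified in the memo."""
    rng = random.Random(seed)
    k = round(len(qualified_school_ids) * fraction)
    return set(rng.sample(list(qualified_school_ids), k))


# Hypothetical example: 20 qualified schools, a quarter held back on NAEP devices.
qualified = [f"SCH{n:03d}" for n in range(1, 21)]
bridge = draw_bridge_sample(qualified, fraction=0.25, seed=1)
models = {sid: assign_delivery_model(True, sid in bridge) for sid in qualified}
print(sum(m is DeliveryModel.NAEP_DEVICE for m in models.values()))
```

Randomly holding back some qualified schools is what makes the two device groups comparable within the qualified population, which is the purpose of the common linking population.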

A.2. How, by Whom, and for What Purpose the Data Will Be Used

In addition to contributing to the reporting tools mentioned above, data from the survey questionnaires are used as part of the marginal estimation procedures that produce the student achievement results. Questionnaire data are also used to perform quality control checks on school-reported data and in special reports, such as the Black–White Achievement Gap report (http://nces.ed.gov/nationsreportcard/studies/gaps/) and the Classroom Instruction Report in reading, mathematics, and science based on the 2015 Student Questionnaire Data (https://www.nationsreportcard.gov/sq_classroom/#mathematics).

Lastly, there are numerous opportunities for secondary data analysis because of NAEP’s large scale, the regularity of its administrations, and its stringent quality control processes for data collection and analysis. NAEP data are used by researchers and educators who have different interests and varying levels of analytical experience.

A.3. Improved Use of Technology

NAEP has continually moved to administration methods that utilize technology, as described below.

Online Teacher and School Questionnaires

The NAEP program provides the teacher and school questionnaires online through a tool known as NAEPq.

Preassessment Activities

Participating NAEP schools have designated staff members who serve as support for the NAEP assessment. Preassessment and assessment activities include functions like finalizing student samples, verifying student demographics, reviewing accommodations, responding to the School Technology Survey, installing the NAEP Assessment Application on student devices (if needed), and planning logistics for the assessment. NAEP uses an Assessment Management System (AMS) for school staff to easily provide necessary administration information through the system, which includes logistical information, updates of student and teacher information, and the completion of inclusion and accommodation information.2

NAEP Assessment Application

The current NAEP Assessment Application was first used in 2024 for the School-based Equipment Proof of Concept to allow students to access the NAEP assessment using school devices. The application is installed by school staff on school devices following the installation and validation instructions found on the eNAEP Download Center, updated with each NAEP administration cycle (see Appendix D). The application is available as a desktop shortcut on Windows devices or as a Kiosk app on the Chromebook login screen, which allows the student to launch the NAEP assessment. It conducts various checks on the device to ensure that it meets the necessary specifications so that the user can consistently interact with the NAEP assessment. The NAEP Assessment Application enables real-time assessment data transfer to NAEP Cloud servers.

Automated Scoring

NAEP administers a combination of selected-response items and open-ended or constructed-response items. In recent years, NAEP has introduced algorithmic scoring for selected-response items that have a definable, finite set of possible responses, each of which can be unambiguously coded to a level of the scoring rubric. Algorithmic scoring is fully automated, yielding process and cost efficiencies. The volume of algorithmically scored items continues to increase as more items are developed for DBA. NAEP uses human scorers to score constructed-response items, using detailed scoring rubrics and proven scoring methodologies. With the increased use of technology, the methodology and reliability of automated scoring (i.e., the scoring of constructed-response items using computer software) have advanced. Although NAEP has not used automated scoring operationally to date, it will be used in 2026 for the majority of Operational reading items. The remaining Operational reading items and the mathematics items will be human-scored.
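To illustrate why a definable, finite response space makes fully automated scoring possible, the Python sketch below enumerates every possible response to a hypothetical three-option multi-select item and precomputes a rubric level for each, so scoring reduces to a table lookup. The item, the partial-credit rubric, and the function names are assumptions for illustration, not NAEP's scoring rules.

```python
from itertools import combinations


def enumerate_responses(options):
    """All possible responses to a multi-select item: every subset of the options."""
    opts = sorted(options)
    return [frozenset(c) for r in range(len(opts) + 1) for c in combinations(opts, r)]


def rubric_level(response, correct):
    """Hypothetical partial-credit rubric:
    2 = exactly the correct set of options,
    1 = a nonempty proper subset of the correct options with no incorrect ones,
    0 = anything else (including no response)."""
    if response == correct:
        return 2
    if response and response < correct:  # "<" is a proper-subset test on sets
        return 1
    return 0


def build_scoring_key(options, correct):
    """Precompute the rubric level for every possible response."""
    return {resp: rubric_level(resp, correct) for resp in enumerate_responses(options)}


def score(key, selected):
    """Score a response by lookup; a KeyError means the key is incomplete."""
    return key[frozenset(selected)]


key = build_scoring_key({"A", "B", "C"}, correct=frozenset({"A", "C"}))
print(len(key))                # 8 possible responses for 3 options
print(score(key, ["C", "A"]))  # full credit
print(score(key, ["A"]))       # partial credit
print(score(key, ["A", "B"]))  # no credit: includes an incorrect option
```

Because the key covers every possible response, a failed lookup signals an incomplete key rather than a response that needs human judgment; constructed responses lack this property, which is why they require human or automated scoring.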

A.7. Consistency with 5 CFR 1320.5

In March 2024, the Office of Management and Budget (OMB) announced revisions to Statistical Policy Directive No. 15: Standards for Maintaining, Collecting, and Presenting Federal Data on Race and Ethnicity (SPD 15) and published the revised SPD 15 standard in the Federal Register (89 FR 22182). The 2026 NAEP data collection described in this package continues to use the race and ethnicity categories of the 1997 SPD 15 standards in some contexts but has moved toward compliance with the 2024 SPD 15 standards in other parts of the data collection. The plans for NAEP’s implementation of the revised SPD 15 standards for the 2026 main NAEP assessment are described below. The J appendices in this submission include data collection instruments that reflect these changes.

NAEP collects race and ethnicity data in two ways, as part of individual questionnaires and as part of the roster uploads from schools (as seen in Appendix I, “Provide Student Information”). As discussed in A.1.a, not all final materials for the 2026 assessments are available in this Amendment #1, and all final instruments will be available in Amendment #2. Below is a description of the plan for race and ethnicity items to be used in NAEP 2026.

For 2026 grade 4 and 8 teacher respondents, NAEP will administer the Figure 1 version of the race and ethnicity item from the revised 2024 SPD 15. Like many other data collections carried out online (and given the possibility that respondents will use mobile devices to respond in the future), the item is structured as a rollout of items: respondents first see an item identical to Figure 3 in the revised standards, followed by breakout items based on the minimum categories they selected.

The roster data that NAEP receives from schools for the purpose of student sampling are proxy data reported by institutions. As a result, NAEP and NCES rely on the ability of these third-party recordkeepers to report their data in compliance with SPD 15. NCES and the Department of Education (ED) are currently working with the National Assessment Governing Board (NAGB), as well as other stakeholders, to establish timelines for compliance with the revised standard for all school systems across the country. ED does not anticipate that school systems will be ready to report data to NAEP that are consistent with the 2024 SPD 15 by the time of data collection for NAEP 2026.

A.8. Consultations Outside the Agency

The NAEP assessments are conducted by a Coalition of organizations, as well as organizations that support the NAEP program, under contract with the U.S. Department of Education.

The current Coalition and the organizations that support the NAEP program include the following:

Management Strategies is responsible for managing the integration of multiple NAEP project schedules and providing data on timeliness, deliverables, and cost performance.

Educational Testing Service (ETS) is responsible for coordinating Coalition contractor activities, developing the assessment instruments, analyzing the data, preparing the reports, and platform development.

Sanametrix is responsible for NAEP web technology, development, operations, and maintenance including the Integrated Management System (IMS).

Pearson is responsible for scoring students’ constructed responses.

Westat is responsible for printing and distributing the assessment materials, managing field operations and data collection, and coordinating with states and districts. Westat’s responsibilities include selecting the school and student samples and weighting the samples. Westat also provides ongoing support and training for full-time NAEP State and TUDA Coordinators in states across the nation through its NAEP Support and Service Center.

In addition to the NAEP Coalition, other organizations support the NAEP program, all of which are under contract with the U.S. Department of Education. The current list of organizations includes the following:3

Manhattan Strategies Group is responsible for supporting the planning, development, and dissemination of NAEP publications and outreach activities.

State Education Agencies (SEAs) establish a liaison between the state education agency and NAEP, serve as the state’s representative to review NAEP assessment items and processes, coordinate the NAEP administration in the state, analyze and report NAEP data, and coordinate the use of NAEP results for policy and program planning.

Appendix A lists the current members of the following NAEP advisory committees:

NAEP Design and Analysis Committee

NAEP Mathematics Standing Committee

NAEP Reading Standing Committee

NAEP Survey Questionnaires Standing Committee

NAEP Mathematics Translation Review Committee

A.9. Payments or Gifts to Respondents

In general, there will be no gifts or payments to respondents, although students do get to keep the NAEP‑provided earbuds used for the DBA if they requested a pair. Some schools also offer recognition parties with pizza or other perks for students who participate; however, these are not reimbursed by NCES or the NAEP contractors. If any incentives are proposed as part of a future special study, they would be justified as part of that future clearance package. As appropriate, the amounts would be consistent with amounts approved in other studies with similar conditions.

A.10. Assurance of Confidentiality

Data security, confidentiality, and privacy protection procedures have been put in place for NAEP to ensure that all NAEP contractors and agents (see section A.8 in this document) comply with all security, confidentiality, and privacy requirements, including:

The Statements of Work of NAEP contracts;

National Assessment of Educational Progress Authorization Act (20 U.S.C. §9622);

Family Educational Rights and Privacy Act (FERPA) of 1974 (20 U.S.C. §1232g);

Privacy Act of 1974 (5 U.S.C. §552a);

Privacy Act Regulations (34 CFR Part 5b);

Computer Security Act of 1987;

U.S.A. Patriot Act of 2001 (P.L. 107-56);

Education Sciences Reform Act of 2002 (ESRA 2002, 20 U.S.C. §9573);

Cybersecurity Enhancement Act of 2015 (6 U.S.C. §151);

The U.S. Department of Education General Handbook for Information Technology Security General Support Systems and Major Applications Inventory Procedures (March 2005);

The U.S. Department of Education Incident Handling Procedures (February 2009);

The U.S. Department of Education, ACS Directive OM: 5-101, Contractor Employee Personnel Security Screenings;

NCES Statistical Standards;

The Children’s Online Privacy Protection Act (COPPA; 15 U.S.C. §§ 6501–6506).

Furthermore, all NAEP contractors and agents will comply with the Department’s IT security policy requirements as set forth in the Handbook for Information Assurance Security Policy and related procedures and guidance, as well as IT security requirements in the Federal Information Security Management Act (FISMA), Federal Information Processing Standards (FIPS) publications, Office of Management and Budget (OMB) Circulars, and the National Institute of Standards and Technology (NIST) standards and guidance. All data products and publications will also adhere to the revised NCES Statistical Standards, as described at the website: http://nces.ed.gov/statprog/2012/. Security controls include secure data processing centers and sites; properly vetted and cleared staff; and data sharing agreements.

All assessment and questionnaire data are protected. This means that NAEP applications that handle assessment and questionnaire data

enforce effective authentication and password management policies;

limit authorization to individuals who truly need access to the data, only granting the minimum necessary access to individuals (i.e., least privilege user access);

keep data encrypted, both in storage and in transport, utilizing volume encryption and transport layer security protocols;

utilize SSL certificates and HTTPS protocols for web-based applications;

limit access to data via software and firewall configurations.
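The transport-encryption requirement above can be illustrated with a short client-side sketch using Python's standard `ssl` module. It is illustrative only and is not drawn from NAEP system documentation; the function name `make_client_tls_context` and the choice of TLS 1.2 as the minimum protocol version are assumptions.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Hypothetical helper: a client context that verifies server certificates."""
    # create_default_context() loads the system CA store and, for server
    # authentication, enables certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Assumption: refuse any protocol version older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


if __name__ == "__main__":
    ctx = make_client_tls_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The resulting context could be passed to a standard-library client, for example `urllib.request.urlopen(url, context=ctx)`, so that a connection fails if the server certificate cannot be verified.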

The data collection process described below is based on the handoff procedures used by the current contractors.

In addition to student information, teacher and principal names are collected and recorded in the AMS online system, which is used to keep track of the distribution and collection of NAEP teacher and school questionnaires. The teacher and principal names are deleted from the AMS at the same time the student information is deleted.

Table NAEP PII Process

| NAEP PII Process |
|---|
| PII is created in the following ways |
| PII is moved in the following ways |
| PII is destroyed in the following ways |

In addition, parents are notified of the assessment. Appendices D-5 through D-9 provide the parental notification letters. The letters are adapted for each grade/age and subject combination and can be downloaded by the school principal or school coordinator; however, the confidentiality information and the applicable legal reference remain unchanged. Please note that parents/guardians are required to receive notification of student participation, but NAEP does not require explicit parental consent (by law, parents/guardians of students selected to participate in NAEP must be notified in writing of their child’s selection prior to the administration of the assessment).

A.11. Sensitive Questions

NAEP emphasizes voluntary respondent participation. Insensitive or offensive items are prohibited by the National Assessment of Educational Progress Authorization Act, and the Governing Board reviews all items for bias and sensitivity. The nature of the questions is guided by the reporting requirements in the legislation, the Governing Board’s Policy on the Collection and Reporting of Background Data, and the expertise and guidance of the NAEP Survey Questionnaires Standing Committee (see Appendix A-4). Throughout the item development process, NCES staff work with consultants, contractors, and internal reviewers to identify and eliminate potential bias in the items.

A.12. Estimation of Respondent Reporting Burden (2026)

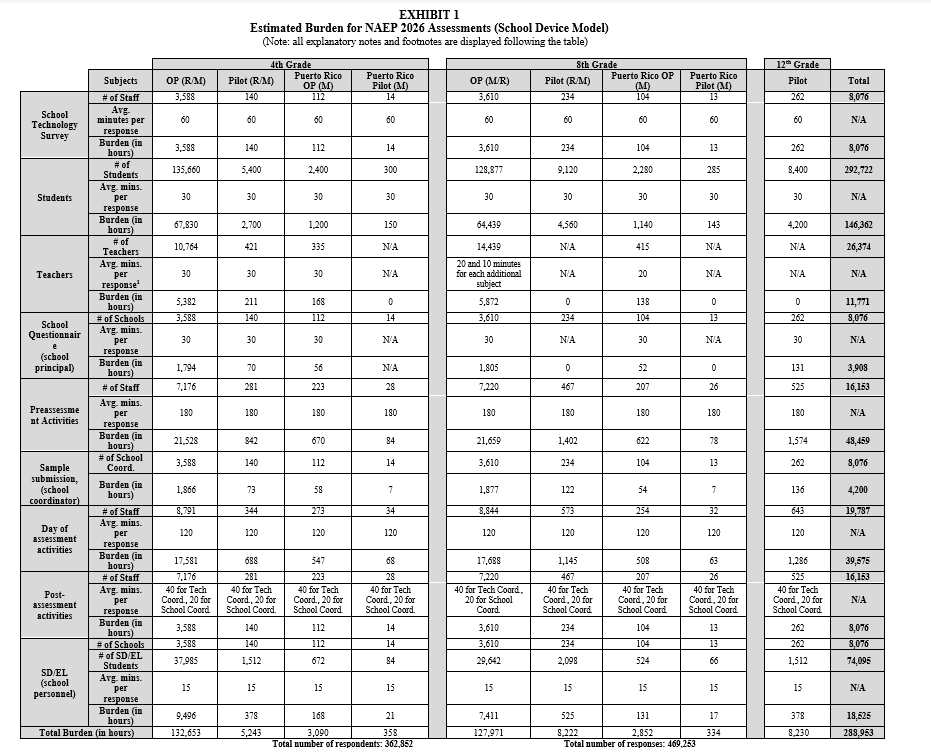

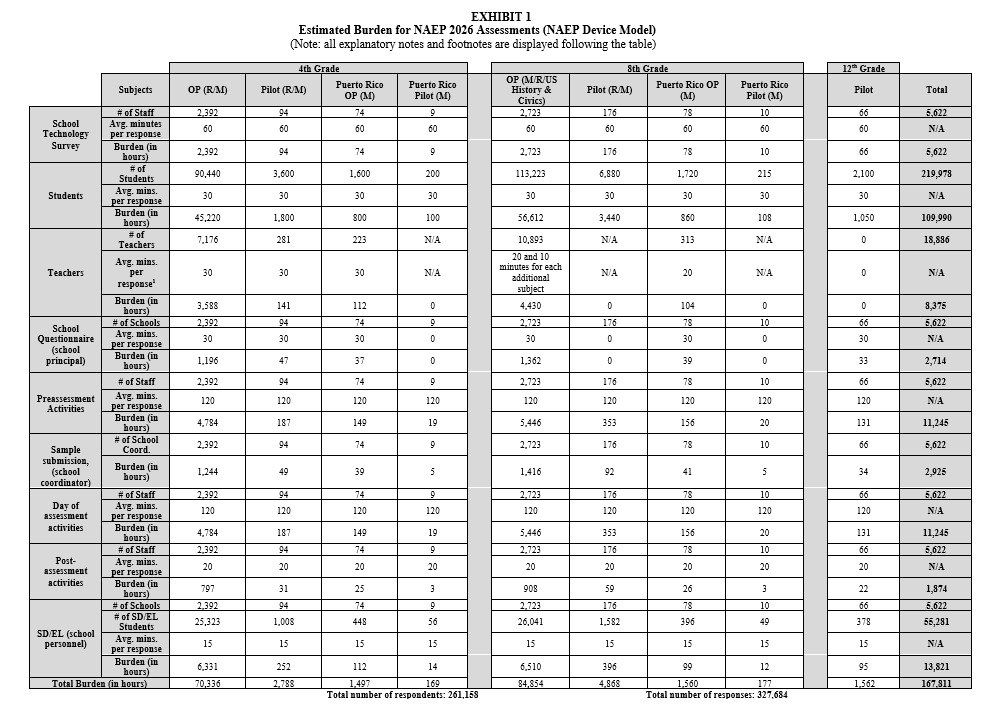

Exhibit 1 provides the burden information per respondent group, by grade, for the 2026 data collections. At the time of this submission, it is assumed that 60 percent of the sample will be in the School Device Model, and 40 percent of the sample will be in the NAEP Device Model. Exhibit 2 summarizes the burden by respondent group.

School Device Model

Teachers—The teachers of fourth- and eighth-grade students participating in main NAEP are asked to complete questionnaires about their teaching background, education, training, and classroom organization. Average fourth-grade teacher burden is estimated to be 30 minutes because fourth-grade teachers often have multiple subject-specific sections to complete. Average eighth-grade teacher burden is estimated to be 20 minutes for a teacher who teaches one subject, plus 10 minutes for each additional subject taught. Based on timing data collected from cognitive interviews, adults can respond to approximately six non-cognitive items per minute. Using this information, the teacher questionnaires are assembled so that most teachers can complete them in the estimated amount of time. Note that Pilot survey questionnaires (SQs) will not be administered to grade 8 teachers in the mainland U.S. or to grades 4 and 8 teachers in Puerto Rico.
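The per-teacher burden rules above can be written as a small function. This is a hypothetical sketch: the function names are illustrative, and treating the six-items-per-minute rate as a simple multiplier on the time estimate is an assumption, not a description of how NCES assembles the questionnaires.

```python
# Rate from the cognitive-interview timing data cited in the text.
ITEMS_PER_MINUTE = 6


def teacher_burden_minutes(grade: int, subjects_taught: int = 1) -> int:
    """Estimated teacher questionnaire burden, in minutes (rules from the text)."""
    if subjects_taught < 1:
        raise ValueError("subjects_taught must be at least 1")
    if grade == 4:
        # Flat average: grade 4 teachers often complete several subject sections.
        return 30
    if grade == 8:
        # 20 minutes for one subject, plus 10 minutes per additional subject.
        return 20 + 10 * (subjects_taught - 1)
    raise ValueError("teacher questionnaires are described for grades 4 and 8 only")


def approx_item_capacity(minutes: int) -> int:
    """Rough number of non-cognitive items answerable in the given time (assumption)."""
    return minutes * ITEMS_PER_MINUTE


if __name__ == "__main__":
    for subjects in (1, 2, 3):
        minutes = teacher_burden_minutes(8, subjects)
        print(f"grade 8, {subjects} subject(s): {minutes} min, ~{approx_item_capacity(minutes)} items")
```

For example, a grade 8 teacher who teaches three subjects would have an estimated burden of 40 minutes under these rules.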

Principals/Administrators—The school administrators in the sampled schools are asked to complete a questionnaire. The core items are designed to measure school characteristics and policies that research has shown are highly correlated with student achievement. Subject-specific items concentrate on curriculum and instructional services issues. The burden for school administrators is determined in the same manner as burden for teachers (see above) and is estimated to average 30 minutes per principal/administrator, although burden may vary depending on the number of subject-specific sections included. The 30-minute burden estimate includes a supplemental charter school questionnaire, which is designed to collect information on charter school policies and characteristics and is provided to administrators of charter schools sampled to participate in NAEP. The supplement covers organization and school governance, parental involvement, and curriculum and offerings. Note that Pilot survey questionnaires (SQs) will not be administered to grade 8 school administrators in the mainland U.S. or to grades 4 and 8 school administrators in Puerto Rico.

School Staff Preassessment Activities—Each school participating in main NAEP has designated staff members to support the NAEP assessment: a School Coordinator and a Technology Coordinator. Preassessment and assessment activities include functions such as finalizing student samples, verifying student demographics, reviewing accommodations, and planning logistics for the assessment. School coordinators use the AMS system to provide requested administration information securely online, including logistical information and updates of student and teacher information. Additionally, these individuals must identify and prepare devices for the assessment, install the NAEP Application on selected devices, and attend an Assessment Planning Meeting (APM). More information about the Technology Coordinators’ and School Coordinators’ responsibilities is included in section B.2. Based on information collected from previous years’ preassessment activities, it is estimated that school personnel will need a total of 6 hours, on average, to complete these activities. AMS data will be used to analyze response patterns in order to further refine the system and minimize burden.

NAEP Device Model

Teachers—The teachers of fourth- and eighth-grade students participating in main NAEP are asked to complete questionnaires about their teaching background, education, training, and classroom organization. Average fourth-grade teacher burden is estimated to be 30 minutes because fourth-grade teachers often have multiple subject-specific sections to complete. Average eighth-grade teacher burden is estimated to be 20 minutes for a teacher who teaches one subject, plus 10 minutes for each additional subject taught. Based on timing data collected from cognitive interviews, adults can respond to approximately six non-cognitive items per minute. Using this information, the teacher questionnaires are assembled so that most teachers can complete them in the estimated amount of time. Note that Pilot survey questionnaires (SQs) will not be administered to grade 8 teachers in the mainland U.S. or to grades 4 and 8 teachers in Puerto Rico.

Principals/Administrators—The school administrators in the sampled schools are asked to complete a questionnaire. The core items are designed to measure school characteristics and policies that research has shown are highly correlated with student achievement. Subject-specific items concentrate on curriculum and instructional services issues. The burden for school administrators is determined in the same manner as burden for teachers (see above) and is estimated to average 30 minutes per principal/administrator, although burden may vary depending on the number of subject-specific sections included. The 30-minute burden estimate includes a supplemental charter school questionnaire, which is designed to collect information on charter school policies and characteristics and is provided to administrators of charter schools sampled to participate in NAEP. The supplement covers organization and school governance, parental involvement, and curriculum and offerings. Note that Pilot survey questionnaires (SQs) will not be administered to grade 8 school administrators in the mainland U.S. or to grades 4 and 8 school administrators in Puerto Rico.

School Staff Preassessment Activities—Each school participating in main NAEP has a designated staff member to serve as its NAEP school coordinator. Preassessment and assessment activities include functions such as finalizing student samples, verifying student demographics, reviewing accommodations, and planning logistics for the assessment. The AMS system was developed so that school coordinators can provide requested administration information securely online, including logistical information and updates of student and teacher information. The school coordinator will also attend an Assessment Planning Meeting (APM). More information about the school coordinator’s responsibilities is included in section B.2. Based on information collected from previous years’ use of the preassessment system, it is estimated that school personnel will need 2 hours, on average, to complete these activities. AMS data will be used to analyze response patterns in order to further refine the system and minimize burden.
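As a worked example, the two preassessment estimates (6 hours in the School Device Model, 2 hours in the NAEP Device Model) can be combined with the assumed 60/40 split between the models to give an average per school. This sketch assumes that the 60/40 split applies to schools rather than students, and the names are hypothetical; it is not the method used to compute Exhibit 1.

```python
# Assumed share of sampled schools in each device model, in percent.
SHARE_PCT = {"school_device_model": 60, "naep_device_model": 40}
# Average preassessment hours per school, by device model (from the text).
PREASSESSMENT_HOURS = {"school_device_model": 6, "naep_device_model": 2}


def blended_preassessment_hours(share_pct: dict, hours: dict) -> float:
    """Share-weighted average preassessment hours per school."""
    if sum(share_pct.values()) != 100:
        raise ValueError("shares must sum to 100 percent")
    # Accumulate in integer percent-hours, then divide once to limit float error.
    weighted = sum(share_pct[model] * hours[model] for model in share_pct)
    return weighted / 100


if __name__ == "__main__":
    print(blended_preassessment_hours(SHARE_PCT, PREASSESSMENT_HOURS))
```

Under these assumptions, a typical sampled school would spend about 4.4 hours on preassessment activities (0.6 × 6 + 0.4 × 2).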

EXHIBIT 2

Total Annual Estimated Burden Time Cost for NAEP 2026 Assessments

| Data Collection Year | Number of Respondents | Number of Responses | Total Burden (in hours) |
|---|---|---|---|
| 2026 | 624,010 | 796,937 | 456,764 |

The estimated respondent burden across all these activities totals 456,764 hours; the associated burden time cost,4 broken out by respondent group, is shown in the table below.

| Year | Students: Hours | Students: Cost | Teachers and School Staff: Hours | Teachers and School Staff: Cost | Principals: Hours | Principals: Cost | Total: Hours | Total: Cost |
|---|---|---|---|---|---|---|---|---|
| 2026 | 256,352 | $1,858,552 | 193,790 | $6,679,927 | 6,622 | $351,496 | 456,764 | |
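The figures in the burden cost table can be cross-checked against the hourly rates given in footnote 4. The sketch below, with hypothetical names, multiplies each group's hours by its rate using decimal arithmetic. The student and principal costs reproduce the published values after rounding to whole dollars; the teacher and school staff product (about $6,679,941) differs from the published $6,679,927 by roughly $14, which is consistent with the published 193,790 hours having been rounded from about 193,789.6 hours. The sketch also shows the annual-to-hourly conversion at 2,080 hours per year described in that footnote.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hourly rates from footnote 4 (students: federal minimum wage).
RATES = {
    "students": Decimal("7.25"),
    "teachers_staff": Decimal("34.47"),
    "principals": Decimal("53.08"),
}
# Burden hours and published costs from the table above.
HOURS = {"students": 256_352, "teachers_staff": 193_790, "principals": 6_622}
PUBLISHED_COST = {"students": 1_858_552, "teachers_staff": 6_679_927, "principals": 351_496}


def hourly_from_annual(annual_wage: Decimal, hours_per_year: int = 2080) -> Decimal:
    """Convert a mean annual wage to an hourly rate, as footnote 4 describes."""
    return annual_wage / hours_per_year


def time_cost(hours: int, rate: Decimal) -> int:
    """Burden time cost in whole dollars (half-up rounding)."""
    return int((hours * rate).quantize(Decimal("1"), rounding=ROUND_HALF_UP))


if __name__ == "__main__":
    for group in HOURS:
        print(group, time_cost(HOURS[group], RATES[group]), PUBLISHED_COST[group])
    print("total hours:", sum(HOURS.values()))
    print("sum of published costs:", sum(PUBLISHED_COST.values()))
```

Summing the three published group costs gives $8,889,975.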

A.13. Cost to Respondents

There are no direct costs to respondents.

A.14. Estimates of Cost to the Federal Government

The total cost to the federal government for the administrations of the 2026 NAEP data collections (contract costs and NCES salaries and expenses) is estimated to be $121,337,495. The table below represents the 2026 assessment cost estimates as of June 2025; if the scope changes, any resulting changes in the costs will be reflected in Amendment #2.

| Cost category | Component | Estimated cost |
|---|---|---|
| NCES salaries and expenses | | $175,038 |
| Contract costs | | $121,162,457 |
| | Scoring | $7,435,816 |
| | Item Development | $20,402,959 |
| | Sampling and Weighting | $5,016,980 |
| | Data Collection (including materials distribution) | $60,256,259 |
| | Recruitment and State Support | $1,093,923 |
| | Design, Analysis, and Reporting | $10,635,838 |
| | Securing and transferring DBA assessment data | $943,923 |
| | NAEP system development | $15,376,759 |
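The cost table can be checked for internal consistency: the contract components should sum to the contract total, and the contract total plus NCES salaries and expenses should equal the $121,337,495 stated in the text. A minimal sketch with hypothetical names:

```python
CONTRACT_COMPONENTS = {
    "Scoring": 7_435_816,
    "Item Development": 20_402_959,
    "Sampling and Weighting": 5_016_980,
    "Data Collection (including materials distribution)": 60_256_259,
    "Recruitment and State Support": 1_093_923,
    "Design, Analysis, and Reporting": 10_635_838,
    "Securing and transferring DBA assessment data": 943_923,
    "NAEP system development": 15_376_759,
}
CONTRACT_TOTAL = 121_162_457
NCES_SALARIES_AND_EXPENSES = 175_038
STATED_TOTAL = 121_337_495


def check_cost_table() -> None:
    """Raise AssertionError if the table does not add up."""
    component_sum = sum(CONTRACT_COMPONENTS.values())
    if component_sum != CONTRACT_TOTAL:
        raise AssertionError(f"components sum to {component_sum}, not {CONTRACT_TOTAL}")
    grand_total = CONTRACT_TOTAL + NCES_SALARIES_AND_EXPENSES
    if grand_total != STATED_TOTAL:
        raise AssertionError(f"grand total is {grand_total}, not {STATED_TOTAL}")


if __name__ == "__main__":
    check_cost_table()
    print("cost table is internally consistent")
```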

A.15. Reasons for Changes in Burden and Budget (from last Clearance submittal)

This Amendment #1 reflects updates to the NAEP 2026 Clearance package burden and budget resulting from the removal of the Field Trial and NCES’ decision not to administer Pilot teacher and school administrator questionnaires for grade 8 in the mainland U.S. and grades 4 and 8 in Puerto Rico. In addition, a discrepancy in the number of schools in the School Device Model was corrected. The net effect of these changes is a slight increase in burden hours, from 449,560 hours in the initial Clearance package to 456,764 hours in Amendment #1.

In addition, the costs to the Federal Government have been reduced by $8,197,412, from $129,534,907 in the initial Clearance package to $121,337,495 in Amendment #1.
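The changes described in this section reduce to simple differences between the two submissions. A sketch with hypothetical names, using only the figures given in this memo:

```python
from typing import Tuple

CLEARANCE = {"burden_hours": 449_560, "federal_cost": 129_534_907}
AMENDMENT_1 = {"burden_hours": 456_764, "federal_cost": 121_337_495}


def change(key: str) -> Tuple[int, float]:
    """Absolute change and percent change (one decimal) from Clearance to Amendment #1."""
    delta = AMENDMENT_1[key] - CLEARANCE[key]
    pct = round(100 * delta / CLEARANCE[key], 1)
    return delta, pct


if __name__ == "__main__":
    for key in ("burden_hours", "federal_cost"):
        print(key, change(key))
```

On these figures, burden rises by 7,204 hours (about 1.6 percent) while federal cost falls by $8,197,412 (about 6.3 percent).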

A.16. Time Schedule for Data Collection and Publications

The time schedule for the data collection for the 2026 assessments is shown below.

NAEP 2026 Administration: January 26–March 20, 2026

The grades 4, 8, and 12 reading and mathematics national and state results are typically released to the public around October of the same year (i.e., about 6–7 months after the end of data collection). All other operational assessments are typically released 12–15 months after the end of data collection. However, because the comparability study of administrations on school devices versus NAEP devices may require additional analysis time, the 2026 results may be released later.

The operational schedule for the NAEP assessments generally follows the same schedule for each assessment cycle. The dates below show the likely timeframe for the 2026 state-level assessments. Any changes to this timeline will be provided in Amendment #2.

Spring–Summer 2025: Select the school sample and notify schools; schools and districts complete the School Technology Survey. If eligible and qualified for school devices, they can begin deploying the NAEP Assessment Application.

October–November 2025: States, districts, or schools submit the list of students.

December 2025: Select the student sample. Schools in the School Device Model will complete installation of the NAEP Assessment Application.

December 2025–January 2026: Schools prepare for the assessments with support from the AMS system.

January–March 2026: Administer the assessments.

March–May 2026: Process the data, score constructed-response items, and calculate sampling weights.

September–December 2026: Prepare the reports, obtaining feedback from reviewers.

January or February 2027 (Grades 4/8, reading and mathematics): Release the results.

June or July 2027 (Grade 8 U.S. history and civics): Release the results.

A.17. Approval for Not Displaying OMB Approval Expiration Date

No exception is requested.

A.18. Exceptions to Certification Statement

No exception is requested.

1 The Governing Board assessment schedule can be found at https://www.nagb.gov/about-naep/assessment-schedule.html.

2 Additional information on the AMS site is included in section B.2.

3 The current contracts expire at varying times. As such, the specific contracting organizations may change during the course of the time period covered under this submittal.

4 The average hourly earnings of teachers and principals, derived from the May 2023 Bureau of Labor Statistics (BLS) Occupational Employment Statistics, are $34.47 for teachers and school staff and $53.08 for principals. Where a mean hourly wage was not provided, it was computed from the mean annual wage assuming 2,080 hours per year. The exception is the student wage, which is based on the federal minimum wage of $7.25 an hour. Source: BLS Occupational Employment Statistics, http://data.bls.gov/oes/ datatype: Occupation codes: Elementary school teachers (25-2000); Middle school teachers (25-2000); High school teachers (25-2000); Principals (11-9030); last modified date May 2023.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |


| File Created | 2025-08-05 |
